Introduction

alsats is an open-source project that aims to reduce the investment (in time and money) needed to create minimum viable datasets for supervised machine learning.

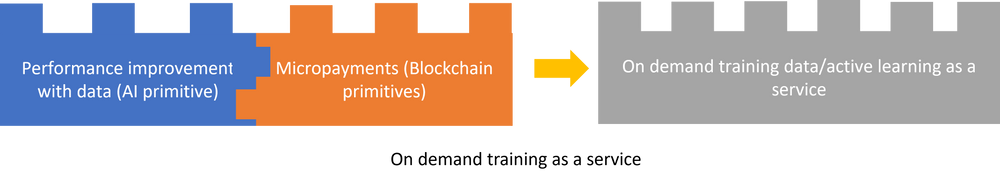

In Idea Legos for Web3XAI, I described how the AI primitive of performance improvement with data can be combined with the public blockchain primitive of micro/nano-payments with finality to create per-sample training as a service.

alsats is an attempt at implementing this Idea Lego. It combines Active Learning for intelligent labeling of training data for supervised machine learning with the ability to charge, pay and immediately settle arbitrarily small amounts on the Lightning Network (starting from 1 millisatoshi, which is about two-tenths of a millionth of a USD at today's prices).
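To make the size of a millisatoshi concrete, here is a minimal conversion sketch. The function name `msat_to_usd` and the price of 20,000 USD per BTC are assumptions chosen for illustration; only the unit relationships (1 BTC = 100,000,000 satoshi, 1 satoshi = 1,000 millisatoshi) are fixed.

```python
from decimal import Decimal

# 1 BTC = 100,000,000 satoshi and 1 satoshi = 1,000 millisatoshi.
MSAT_PER_BTC = 100_000_000 * 1_000


def msat_to_usd(amount_msat: int, btc_price_usd: Decimal) -> Decimal:
    """Convert a millisatoshi amount to USD at the given BTC price."""
    return Decimal(amount_msat) * btc_price_usd / MSAT_PER_BTC


# Assumed price, for illustration only: 20,000 USD per BTC.
one_msat_usd = msat_to_usd(1, Decimal("20000"))
print(one_msat_usd)
```

At that assumed price, 1 millisatoshi works out to 0.0000002 USD, which matches the two-tenths of a millionth of a dollar quoted above. The figure scales linearly with the BTC price.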

Why Active Learning for labeling? From the Wikipedia Active Learning page:

"There are situations in which unlabeled data is abundant but manual labeling is expensive. In such a scenario, learning algorithms can actively query the user/teacher for labels. This type of iterative supervised learning is called active learning. Since the learner chooses the examples, the number of examples to learn a concept can often be much lower than the number required in normal supervised learning..."

Why use a blockchain layer 2 network such as Lightning instead of legacy payment rails? Frictionless per-sample/per-compute payment requires four things: the ability to transact and track arbitrarily small monetary values, instant settlement, extremely low (potentially near-zero) transaction costs, and extreme scalability. The Lightning Network scores high on all of these requirements, while legacy payment rails do not.

Features

alsats has the following features:

- API-based model training and data labeling.

- Intelligent labeling - Training examples are selected for labeling based on the model's prediction uncertainty for those examples.

- Iterative Learning - Learn models starting with as little as one data point. As more data is labeled and trained, model metrics and labeling suggestions improve.

- Flexible payments - Pay ONLY for the compute requested in the process (i.e. pay as little as a few millisats per training/label iteration).

- Train and label trustlessly - No need to register or sign up for an account.

- Data security - alsats doesn't store any user data in its current implementation. Future implementations will store data only if the customer wants them to.
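The "intelligent labeling" feature rests on uncertainty sampling. The sketch below shows one common uncertainty measure, least confidence, in plain Python. It is an illustration only: the source does not state which measure alsats uses, and the function names and the 0.5 threshold are hypothetical.

```python
from typing import List, Sequence


def least_confidence(probabilities: Sequence[float]) -> float:
    """Uncertainty score: 1 minus the probability of the most likely class."""
    return 1.0 - max(probabilities)


def needs_label(probabilities: Sequence[float], threshold: float = 0.5) -> bool:
    """Ask for a human label only when the model is uncertain enough.

    The threshold is a hypothetical tuning knob, not an alsats parameter.
    """
    return least_confidence(probabilities) > threshold


def rank_for_labeling(pool: Sequence[Sequence[float]]) -> List[int]:
    """Return pool indices ordered from most to least uncertain."""
    return sorted(
        range(len(pool)),
        key=lambda i: least_confidence(pool[i]),
        reverse=True,
    )


# Made-up class probabilities for three unlabeled examples.
pool_probabilities = [
    [0.98, 0.01, 0.01],  # confident prediction
    [0.40, 0.35, 0.25],  # very uncertain prediction
    [0.70, 0.20, 0.10],  # moderately uncertain prediction
]
ranking = rank_for_labeling(pool_probabilities)
```

Here the second example would be sent for labeling first, since the model's top class probability is lowest for it. Labeling effort is thereby concentrated on the examples the model is least sure about.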

Architecture

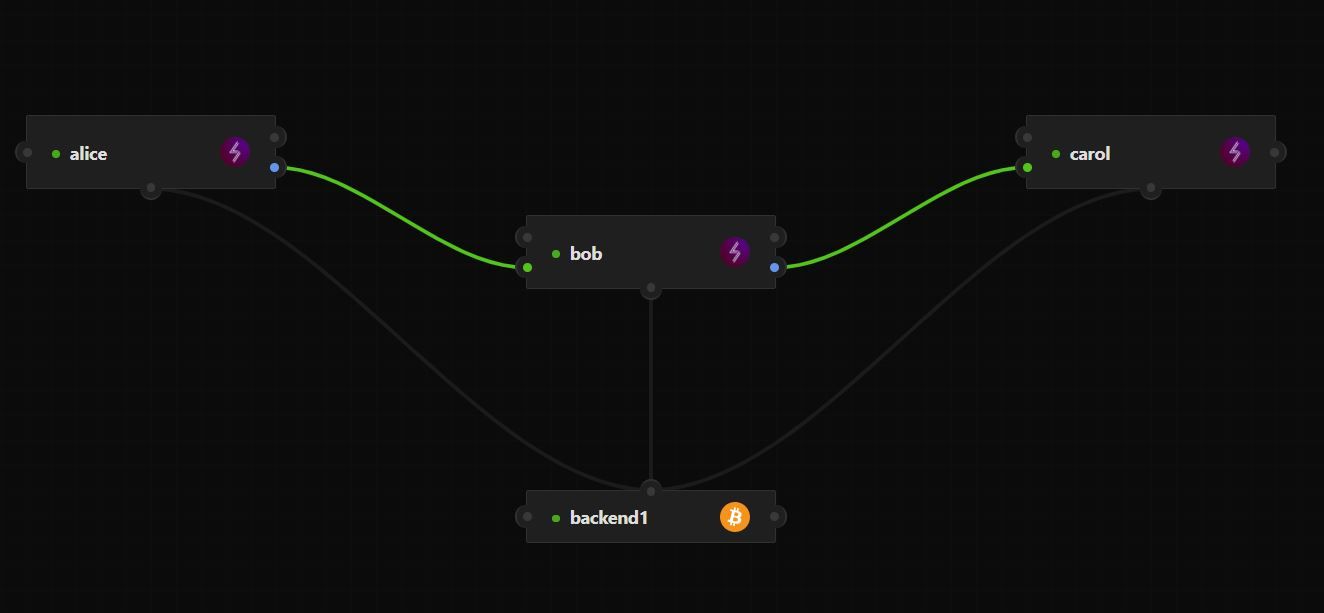

alsats uses an HTTP client-server architecture. Both the client and the back-end server run Lightning Network Daemon (LND) nodes. The back-end exposes REST API endpoints to clients. Clients open outgoing Lightning channels to the server, either directly or through other LND nodes connected to the server's LND node.

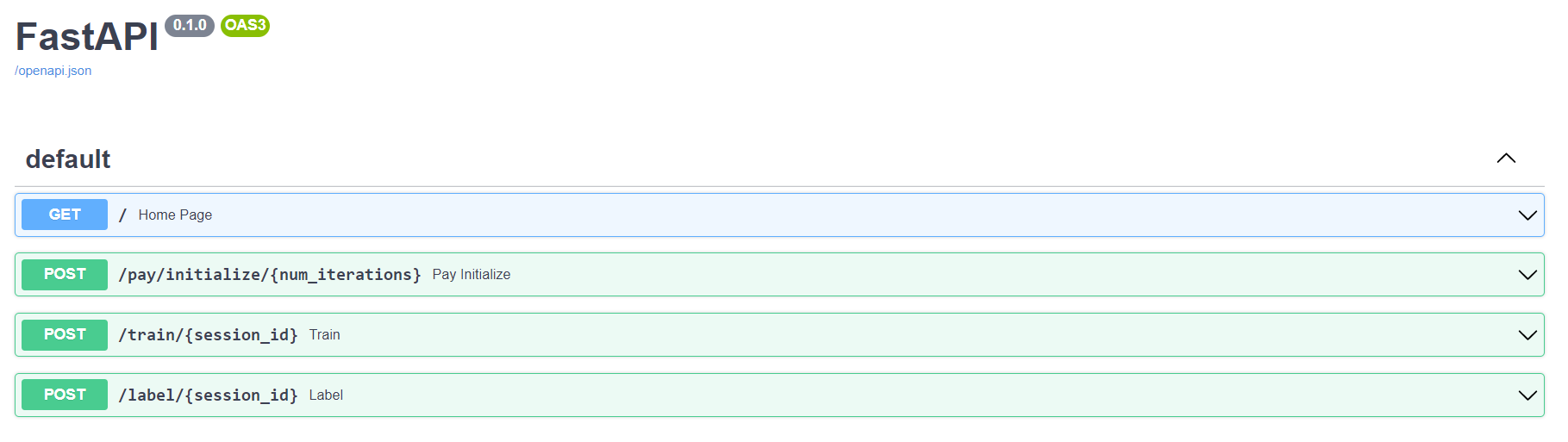

Currently, three endpoints are implemented.

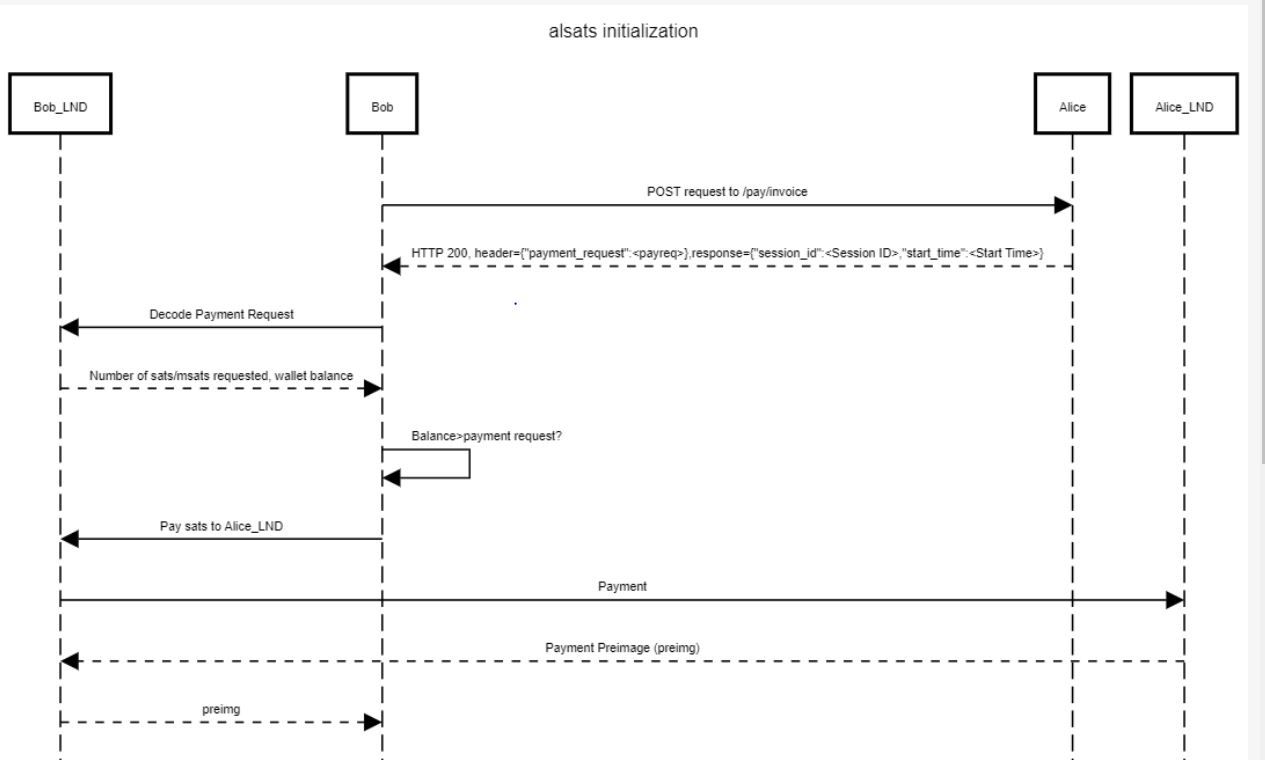

- /pay/initialize: This endpoint allows users to initialize an Active Learning session with a desired number of Active Learning iterations. A Lightning payment request is sent in the response header along with the session ID that tracks the active learning session.

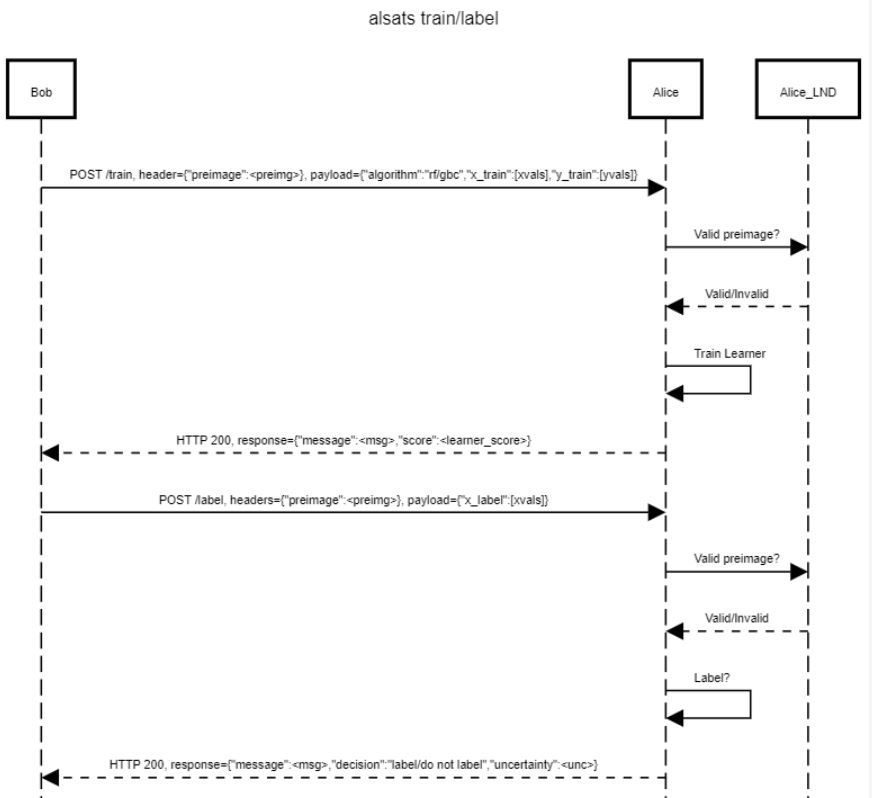

- /train: After paying the payment request, clients hit the /train endpoint with a header containing the payment preimage, along with the data used to train the underlying Active Learner. The server retains the payment hash for the payment request generated in the initialization step. When it receives the payment preimage, it hashes the preimage and compares the result to the stored payment hash to decide whether the training request is legitimate. This implicit authorization allows clients to request training/labeling without having to register or sign up. It also allows alsats to operate without storing user data.

- /label: A trained Active Learner can be queried for a label/no-labeling-needed decision.
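The preimage check described for /train can be sketched as follows. On Lightning, the payment hash is the SHA-256 digest of the payment preimage, so the server only needs to hash what the client sends and compare. Everything else here is an assumption for illustration: the in-memory `sessions` store, the function names, and generating the preimage locally (in a real deployment the invoice comes from the LND node, and the payer learns the preimage when the payment settles).

```python
import hashlib
import hmac
import secrets
import uuid
from typing import Dict, Tuple

# Hypothetical in-memory store: session ID -> expected payment hash (hex).
sessions: Dict[str, str] = {}


def initialize_session() -> Tuple[str, str, bytes]:
    """Stand-in for /pay/initialize: create a session and its payment hash.

    The preimage is generated locally only to keep the sketch
    self-contained; it is returned so the example can play the payer.
    """
    preimage = secrets.token_bytes(32)
    payment_hash = hashlib.sha256(preimage).hexdigest()
    session_id = str(uuid.uuid4())
    sessions[session_id] = payment_hash
    return session_id, payment_hash, preimage


def is_authorized(session_id: str, preimage_hex: str) -> bool:
    """Stand-in for the /train check: hash the preimage and compare."""
    expected = sessions.get(session_id)
    if expected is None:
        return False  # unknown session
    try:
        preimage = bytes.fromhex(preimage_hex)
    except ValueError:
        return False  # header value was not valid hex
    actual = hashlib.sha256(preimage).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(actual, expected)


session_id, payment_hash, preimage = initialize_session()
print(is_authorized(session_id, preimage.hex()))
```

Because only the party that paid the invoice can know the preimage, presenting it doubles as proof of payment, which is why no account or stored user record is needed.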

Demo

The endpoints described above form the basis for a complete API-based Active Learning framework that allows users to iteratively train and label data while paying as little as a few millisats, and only for the compute requested. alsats does not need to retain training or labeling data: the underlying Active Learner can be trained without user data being stored.

The demo below shows how alsats reduces the number of labeled points needed to reach ~90% classification accuracy on the classic MNIST dataset, using an unoptimized, default Random Forest classifier as implemented in scikit-learn.

The full MNIST dataset used in this demo has ~70K data points. As the demo shows, the classifier reaches an out-of-sample rolling classification accuracy of ~90% in ~2500 train + label iterations, i.e. just ~1250 labeled data points, or roughly 1.8% of the dataset.
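For readers unfamiliar with the metric, a rolling accuracy can be tracked over a fixed window of the most recent predictions. The sketch below is one plausible way to do this; the `RollingAccuracy` class and the window size are assumptions, since the source does not say how the demo computes the metric.

```python
from collections import deque


class RollingAccuracy:
    """Accuracy over the most recent `window` predictions."""

    def __init__(self, window: int = 100) -> None:
        # A bounded deque silently drops the oldest result once full.
        self._hits = deque(maxlen=window)

    def update(self, predicted, actual) -> float:
        """Record one prediction and return the current rolling accuracy."""
        self._hits.append(predicted == actual)
        return sum(self._hits) / len(self._hits)


# Tiny made-up stream of (predicted, actual) pairs with a window of 3.
tracker = RollingAccuracy(window=3)
history = [tracker.update(p, a) for p, a in [(1, 1), (0, 1), (7, 7), (3, 3)]]

# Share of the ~70K points that were labeled in the demo (~1250).
labeled_fraction = 1250 / 70_000
```

For the accuracy to be out-of-sample, each point would have to be predicted before it is used for training, so that the model is always scored on data it has not yet seen.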

Outlook

alsats is under active development and has been released under the MIT license at https://github.com/antaraxia/alsats. A number of important short-term development and adoption milestones remain:

- Creating a full-fledged, hosted alsats service on testnet LND and BTC.

- Encouraging data scientists to beta-test alsats once it is fully hosted.

- Switching alsats over to LND and BTC mainnet.

- Active Learning improvements - more complete proofs of concept for resource savings using alsats.

- Implementing PyTorch and Keras Learners in addition to the Random Forest and Gradient Boosted classifiers currently implemented.

- A POC for image labeling in alsats.

Acknowledgments

- Tivadar Danka, for the fantastic modAL Active Learning Package.

- Richard Blythman from Algovera, for periodically reviewing progress on alsats and making helpful suggestions.